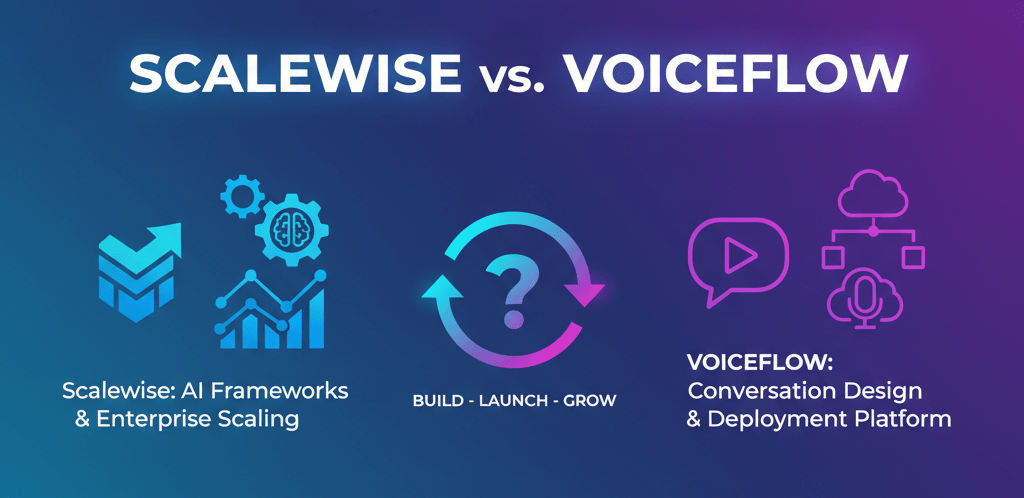

“Analyze Scalewise vs. Voiceflow for conversational AI development: compare their generative vs. rule-based core, assess speed, pricing, and integration.”

The world of conversational AI is buzzing. Developers and businesses are racing to build smarter, more responsive chatbots and voice assistants. This boom has given rise to powerful platforms designed to simplify the creation process. Among the top contenders are Voiceflow, a veteran in the visual design space, and Scalewise, a disruptive newcomer built on a purely generative AI foundation. For a developer, choosing the right platform is a critical decision that impacts everything from development speed to the ultimate power and flexibility of your AI agent.

This article isn’t just another surface-level comparison. We’re diving deep into the technical details of Scalewise vs. Voiceflow. We’ll dissect their core philosophies, compare their underlying technology, analyze their integration capabilities, and look at the all-important bottom line: cost. If you’re a developer or builder evaluating no-code/low-code conversational AI platforms, this is the definitive guide to making an informed choice. Our goal is to move beyond the marketing hype and provide you with a precise, objective analysis of which tool will best serve your project’s needs. We’ll explore the key decision criteria that matter most to builders: speed, power, and accessibility.

The Core Philosophy: A Tale of Two Builders

Scalewise and Voiceflow solve the same problem: both help you create conversational AI. However, they approach that problem in fundamentally different ways. Their core philosophies shape every aspect of the development experience, from initial setup to long-term maintenance.

Voiceflow: The Meticulous Architect

Voiceflow is built around the concept of visual conversation design. Think of it as being an architect with a blueprint. You use a drag-and-drop canvas to lay out every possible path a conversation can take. Each user interaction, bot response, and logical decision is represented by a “block” or “node.” You connect these nodes with lines, creating a comprehensive flowchart that dictates the AI’s behavior.

This approach gives you an incredible amount of control. You can meticulously script dialogues, design complex branching logic for user choices, and manually handle every edge case you can imagine. If a user says, “I want to book a flight,” you can create a specific path with blocks for “Ask for destination,” “Ask for date,” and “Confirm details.” This method is very explicit. The AI does precisely what you tell it to do, following the map you’ve drawn.

For years, this visual, rule-based method was the standard for chatbot builders. It’s intuitive for designers who think in flowcharts and provides a clear, visual representation of the agent’s logic. However, this control comes at a cost. These flowcharts can quickly become massive and tangled, a phenomenon developers call “spaghetti flows.” Managing a complex agent with hundreds of nodes becomes a significant maintenance headache. Furthermore, the AI is brittle; it can only handle paths you’ve explicitly designed. If a user asks a question in a slightly different way or inquires about something you didn’t build a branch for, the conversation often hits a dead end, resulting in the dreaded “Sorry, I don’t understand” response.
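To make the flowchart model concrete, here is a minimal sketch of a conversation stored as a graph of nodes and walked one turn at a time. The node names, the `FLOW` structure, and the `step` function are illustrative assumptions, not Voiceflow's actual data model. The point is only that any input without a drawn edge lands on the fallback.

```python
# Hypothetical flow graph: each node has a scripted line and outgoing edges.
# "*" means "accept any input and move on" (e.g. a free-text answer).
FLOW = {
    "start": {"say": "How can I help?", "next": {"book_flight": "ask_destination"}},
    "ask_destination": {"say": "Where are you flying to?", "next": {"*": "ask_date"}},
    "ask_date": {"say": "What date?", "next": {"*": "confirm"}},
    "confirm": {"say": "Confirming your details.", "next": {}},
}
FALLBACK = "Sorry, I don't understand."


def step(node_id, user_input):
    """Advance one turn. Returns (next_node_id, bot_reply)."""
    edges = FLOW[node_id]["next"]
    target = edges.get(user_input, edges.get("*"))
    if target is None:
        # No edge was drawn for this input: the conversation dead-ends.
        return node_id, FALLBACK
    return target, FLOW[target]["say"]
```

Here `step("start", "book_flight")` moves to the destination question, while `step("start", "cancel_flight")` stays put and returns the fallback, because nobody drew that branch.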

Scalewise.ai: The Generative Knowledge Engine

Scalewise.ai throws the flowchart blueprint out the window. Its philosophy is not about designing a conversation; it’s about building a brain. Instead of meticulously mapping out conversational paths, you start by giving the AI knowledge. This is a knowledge-base-first approach.

The process is radically simple. You upload your data—product manuals, company policies, FAQ documents, API documentation, website content, or any other structured or unstructured text. Scalewise ingests this information and uses a powerful generative AI model to understand it conceptually. There are no intents to train, no entities to define, and no conversational paths to draw.

When a user asks a question, the Scalewise agent doesn’t look for a pre-defined flowchart path. Instead, it performs a semantic search across its entire knowledge base to find the most relevant information. Then, it uses its generative capabilities to synthesize that information into a natural, coherent, and accurate answer. It understands context, nuance, and intent directly from the user’s query and the source material.

This represents a fundamental paradigm shift. Your job as a developer is no longer to be a conversation architect, trying to predict every possible user utterance. Instead, you become a knowledge curator. Your primary task is to ensure the AI has access to high-quality, accurate information. The AI handles the heavy lifting of understanding and conversation. This approach creates agents that are incredibly resilient, flexible, and capable of answering a virtually infinite range of questions, as long as the information exists in their knowledge base.

Speed and Simplicity: From Idea to Deployment in Minutes, Not Weeks

For developers, time is the most valuable resource. The speed at which you can prototype, iterate, and deploy is a massive competitive advantage. Here, the philosophical differences between Scalewise and Voiceflow translate into a night-and-day difference in development velocity.

The Voiceflow Development Lifecycle

Suppose you want to build a customer support bot for an e-commerce store using Voiceflow. Here’s a simplified look at the process:

- Project Setup: Create a new project and select your preferred channels (e.g., web chat, WhatsApp).

- Intent Scoping: You brainstorm all the potential questions users might ask. For example: “Where is my order?”, “What is your return policy?”, “Do you ship internationally?”. Each of these becomes an “intent.”

- Flowchart Design: You open the canvas and start dragging blocks. You create a “Listen” block for each intent. You then need to connect each intent to a “Speak” block containing the scripted answer.

- Training Utterances: For each intent, you must provide multiple examples of how a user might phrase it. For the “return policy” intent, you’d add phrases like “How do I return an item?”, “Can I send something back?” or “What’s the return process?” You typically need 10-15 variations per intent for the NLU model to become reasonably accurate.

- Building Logic: What if a return depends on the product type or the purchase date? You now have to add “Logic” blocks (If/Else conditions), “API” blocks to check a database, and create multiple new branches in your flowchart to handle these variations.

- Testing and Debugging: You use the built-in tester to try out your conversation flows. If the bot misunderstands something, you have to go back, add more training phrases, and restructure your flowchart.

- Maintenance: When your return policy changes, you must locate the specific “Return Policy” block in your potentially massive flowchart and manually update the text. If you add a new product category with a different policy, you have to build out a whole new branch.

Even for a relatively simple bot, this process can take hours or days. For a complex, enterprise-grade agent, you can expect weeks or months of development and a significant ongoing maintenance burden. The visual builder, which seems simple at first, quickly reveals its complexity.
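The "Building Logic" and "Maintenance" steps above boil down to hand-written conditions. A rough sketch of what the If/Else blocks encode, assuming a made-up policy table (the product types and windows below are invented for illustration), might look like this:

```python
from datetime import date

# Hypothetical policy: return window in days, one entry per product type.
# Every new product category needs a new entry, i.e. a new branch in the flow.
RETURN_WINDOW_DAYS = {"shoes": 30, "electronics": 14}


def can_return(product_type, purchased_on, today):
    """Mirror of an If/Else block: eligible only if a branch exists and the window is open."""
    window = RETURN_WINDOW_DAYS.get(product_type)
    if window is None:
        return False  # no branch was built for this product type
    return (today - purchased_on).days <= window
```

When the policy changes, someone has to find and edit this table (or, on the canvas, the matching blocks) by hand.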

The Scalewise Development Lifecycle

Now, let’s build that same e-commerce support bot using Scalewise.ai and see how its modern, AI-assisted workflow creates a powerful agent in minutes.

- Initiate and Instruct the Agent: You log in and click “Create New Agent.” This is where the magic begins. Instead of being presented with a blank canvas or a complex menu, you’re given a choice. You can opt for a structured sequence builder for more rigid tasks, or you can choose the revolutionary prompt-based agent builder. We’ll choose the latter. You’re now faced with a simple input box, where you instruct the AI conversationally, just as you would instruct a new team member.

- Configure and Generate: After providing the core prompt, you can fine-tune the agent’s behavior through a few simple configuration settings. This allows you to customize its personality, set response constraints, and add other specific rules without writing any code. Once you’re satisfied, you click “Generate Agent.” In seconds, Scalewise uses your instructions to create the foundational logic and personality of your AI assistant.

- Upload the Knowledge Base: With the agent’s core persona now established, it’s time to give it the necessary expertise. You simply upload your existing “Return Policy.pdf,” “Shipping Information.docx,” and “FAQ.html” pages. Scalewise automatically ingests, chunks, and indexes the content, making it ready for querying.

- Test and Deploy: You open the test window and ask, “How can I send back my order if it doesn’t fit?”

The Scalewise agent processes the question. It first recalls your instructions to be friendly and helpful. Then, it performs a semantic search across the uploaded documents, understands that “send back my order” relates to the return policy, and generates a natural language answer based on the specific text in your file. If your policy mentions a 30-day window, the agent will tell the user so. If it requires an RMA number, the agent will explain that process, all while maintaining the persona you defined.

And that’s it. Your intelligent agent is ready to go, and the initial build is complete in under five minutes.

There was no flowcharting, no intent training, and no manual scripting of answers. The deployment speed is staggering. When your return policy changes, you don’t hunt through a complex diagram. You simply upload the new document, and the agent’s knowledge is instantly updated. This streamlined, prompt-first, and knowledge-based approach reduces the development and maintenance workload by an order of magnitude, freeing developers to focus on higher-value tasks.
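The "ingests, chunks, and indexes" step mentioned above is handled by the platform, but the idea of chunking is easy to sketch. The function below is a generic word-window splitter with overlap. It is an assumption about how such a step could work, not a description of Scalewise's actual pipeline, and the default sizes are arbitrary.

```python
def chunk_text(text, max_words=50, overlap=10):
    """Split text into chunks of up to max_words words, each sharing
    `overlap` words with the previous chunk so sentences are not cut cold."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    stride = max_words - overlap
    for start in range(0, len(words), stride):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

Re-uploading a changed document would then amount to re-running this step and replacing the old chunks in the index.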

Under the Hood: NLU/NLP Engines vs. True Generative AI

To truly understand the difference between Scalewise and Voiceflow, it is essential to examine their core technologies in detail. They operate on fundamentally different principles of artificial intelligence, which have massive implications for their capabilities and limitations.

Voiceflow’s Engine: A Hybrid of Rules and NLU

Voiceflow’s intelligence is primarily based on a traditional Natural Language Understanding (NLU) model, augmented by a rule-based engine (the flowchart). Here’s how it works:

- Intent Classification: When a user types a message, the NLU’s first job is to match that input to one of the intents you pre-defined. It analyzes the training utterances you provided and makes a statistical guess. For instance, if the user says, “I want to return my shoes,” the model should classify this as the ReturnItem intent.

- Entity Extraction: The NLU then scans the input for specific pieces of information, or “entities,” that you’ve told it to look for. In “return my shoes,” “shoes” might be extracted as a ProductType entity.

- Rule-Based Execution: Once the intent is classified (e.g., ReturnItem) and entities are extracted (e.g., ProductType = “shoes”), the system hands control over to the flowchart. The flowchart has a rule that says, “If you see the ReturnItem intent, follow this specific path of blocks.”

This system is robust for task-oriented bots where the conversation follows a predictable pattern. It’s deterministic and gives the developer fine-grained control. However, its weaknesses are significant.

- Brittleness: The entire system depends on correctly classifying the intent. If the user’s phrasing differs significantly from your training examples, the NLU will misclassify the message or fail to match any intent, and the bot will fall back to an error response. It lacks a generalized understanding of language; it only recognizes the specific patterns on which it was trained.

- Scalability Issues: For every new thing you want the bot to understand, you must create a new intent, provide numerous training examples, and build a new branch in the flowchart. An agent that needs to understand hundreds of topics becomes far more complex to develop and maintain.

- Lack of True Understanding: The NLU is a sophisticated pattern matcher. It doesn’t actually understand your return policy. It just knows that certain user phrases should trigger a specific pre-written response. It cannot answer follow-up questions or reason about the information in any way.
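The classify, extract, execute pipeline described above, and its brittleness, can be imitated in a few lines. This is a deliberately crude sketch: real NLU engines use trained statistical models, whereas the version below scores word overlap (Jaccard similarity) against the training utterances. The intent names come from the example above; the training phrases, entity values, threshold, and replies are assumptions.

```python
import re

TRAINING = {
    "ReturnItem": ["how do i return an item", "can i send something back",
                   "i want to return my shoes"],
    "TrackOrder": ["where is my order", "track my package"],
}
PRODUCT_TYPES = {"shoes", "jacket", "camera"}  # hypothetical entity values


def tokenize(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def classify(message, threshold=0.5):
    """Return the best-matching intent, or None if nothing is close enough."""
    tokens = tokenize(message)
    best_intent, best_score = None, 0.0
    for intent, examples in TRAINING.items():
        for example in examples:
            ex_tokens = tokenize(example)
            score = len(tokens & ex_tokens) / len(tokens | ex_tokens)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


def extract_entities(message):
    found = tokenize(message) & PRODUCT_TYPES
    return {"ProductType": sorted(found)[0]} if found else {}


def handle(message):
    """Rule-based execution: route the classified intent to a scripted reply."""
    intent = classify(message)
    if intent is None:
        return "Sorry, I don't understand."
    if intent == "ReturnItem":
        product = extract_entities(message).get("ProductType", "item")
        return f"Starting a return for your {product}."
    return "Let me look up your order."
```

`handle("I want to return my shoes")` follows the scripted path, but `handle("My sneakers don't fit, what now?")` hits the fallback: the request is the same, yet the wording shares almost nothing with the training phrases.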

Scalewise’s Engine: Pure Generative AI with RAG

Scalewise operates on a completely different, much more modern architecture. It is built on top of a large language model (LLM), similar to the technology behind ChatGPT. It employs an advanced technique known as Retrieval-Augmented Generation (RAG).

- Vectorization of Knowledge: When you upload your documents, Scalewise not only stores the text but also splits it into chunks and converts each chunk into a mathematical representation called a vector embedding. This process captures the semantic meaning of the content, not just the keywords. All this data is stored in a specialized vector database.

- Semantic Retrieval: When a user asks a question, Scalewise converts that question into a vector as well. It then performs a high-speed search in the vector database to find the chunks of text from your documents whose meaning is most similar to the user’s question. This is the “Retrieval” part. It’s incredibly effective because it finds relevant information even when the user’s wording differs significantly from the source text.

- Generative Response: The retrieved chunks of relevant information are then fed to the LLM, along with the original user question. The LLM is given a prompt that essentially says, “Using only this provided context, answer this user’s question.” This is the “Generation” part. The LLM synthesizes the retrieved information into a unique, conversational, and accurate response.
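The three steps above can be sketched end to end, with one big caveat: the `embed` function below is a toy bag-of-words counter standing in for a real embedding model, so it matches on shared words rather than on meaning. The function names and prompt layout are assumptions for illustration; only the "using only this provided context" instruction comes from the description above.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, chunks, k=2):
    """Retrieval: rank stored chunks by similarity to the question vector."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question, context_chunks):
    """Generation input: the retrieved context plus the original question."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return ("Using only this provided context, answer the user's question.\n"
            f"Context:\n{context}\n"
            f"Question: {question}")
```

In a real system the resulting prompt would be sent to the LLM; that call is omitted here because it depends on the model provider.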

This RAG architecture is a game-changer for several reasons:

- Robustness and Flexibility: It is not dependent on pre-defined intents. It has a genuine, generalized understanding of language. Users can ask questions in countless ways, and as long as the answer exists in the knowledge base, the system can find it.

- Zero-Shot Learning: You don’t need to train it with example utterances. The underlying LLM already has general language ability, and the agent draws its answers directly from the documents you provide at query time, with no model training step. This is why you can build a powerful agent in minutes.

- Grounded in Truth: A significant concern with LLMs is their tendency to “hallucinate” or make up facts. The RAG architecture significantly mitigates this problem by instructing the LLM to base its answers only on the specific information retrieved from your documents. This helps keep the agent factually accurate and aligned with your source of truth.

- Contextual Understanding: The model can handle complex queries, remember the context of the conversation, and answer nuanced follow-up questions without requiring special logic to be built by the developer.

For developers, this means you can finally build AI agents that feel truly intelligent and conversational, rather than rigid, easily breakable scripts.

Integration Capabilities and the Scalewise Marketplace Advantage

An AI agent is only helpful if it can connect to the systems and platforms where your users are. Both Voiceflow and Scalewise offer ways to integrate, but their approach and the opportunities they present to developers are quite different.

Voiceflow’s Integration Ecosystem

Voiceflow has been around longer, and consequently, it has a mature set of pre-built channel integrations. You can easily deploy your agent to platforms like:

- Web Chat (via a native widget)

- Twilio for SMS and WhatsApp

- Facebook Messenger

- Slack

- Google Assistant and Amazon Alexa

For custom integrations, Voiceflow provides an API block within the flowchart. This allows you to make HTTP requests to external services to fetch data (e.g., get a user’s order status from a Shopify API) or push data (e.g., create a support ticket in Zendesk). You can also run custom JavaScript code within a block for more complex logic. This makes Voiceflow a capable platform for building agents that need to interact with other business systems. The integrations are components within your flow, serving the logic you’ve designed on the canvas.
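Outside the canvas, what an API block does is roughly equivalent to the following sketch: build an authenticated HTTP request, then turn the JSON reply into a scripted sentence. The endpoint URL, the `status` field, and the function names are placeholders, not the Shopify API or Voiceflow's block configuration. The request is only constructed here, not sent.

```python
import json
from urllib.parse import quote
from urllib.request import Request


def build_order_status_request(order_id, api_token):
    """Build (but do not send) a GET request for an order's status."""
    # Hypothetical endpoint: substitute the real order API of your store.
    url = f"https://shop.example.com/api/orders/{quote(order_id, safe='')}"
    headers = {"Authorization": f"Bearer {api_token}", "Accept": "application/json"}
    return Request(url, headers=headers, method="GET")


def reply_from_response(body):
    """Map the (assumed) JSON payload onto the bot's scripted reply."""
    data = json.loads(body)
    return f"Your order is {data['status']}."
```

Passing the request to `urllib.request.urlopen` would perform the call; the flow then continues along whichever branch the response selects.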

Scalewise: API-First and a Developer Marketplace

Scalewise is designed with a fundamentally API-first mentality. While it offers simple ways to embed an agent on a website, its true power lies in its robust API that allows developers to integrate Scalewise’s generative intelligence into any application. You can make a simple API call with a user’s query and receive the generated response in JSON format. This means you can build:

- Custom front-end chat interfaces.

- AI-powered search for your internal documentation site.

- A Slack bot that answers questions for your engineering team.

- An interactive product guide within your mobile app.

The possibilities are limitless because you’re not confined to a specific set of channels. You are given the core AI engine, and you can plug it in wherever you need it.
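As a rough illustration of the "query in, JSON out" pattern, the sketch below builds a POST request and reads an answer from a JSON body. Everything specific in it is a placeholder: the URL, the `query` and `answer` field names, and the bearer-token header are assumptions, since the actual Scalewise API is not documented here. Check the official API reference before relying on any of it.

```python
import json
from urllib.request import Request

# Placeholder URL: the real endpoint is not given in this article.
AGENT_URL = "https://api.example.invalid/agents/AGENT_ID/query"


def build_query_request(question, api_key, url=AGENT_URL):
    """Build (but do not send) a JSON POST carrying the user's question."""
    payload = json.dumps({"query": question}).encode("utf-8")  # assumed field name
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",  # assumed auth scheme
    }
    return Request(url, data=payload, headers=headers, method="POST")


def extract_answer(response_body):
    """Pull the generated text out of an (assumed) {"answer": ...} response."""
    return json.loads(response_body).get("answer", "")
```

Because the exchange is plain HTTP and JSON, the same two functions could sit behind a chat widget, a Slack bot, or a mobile app.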

However, the most significant differentiator is the Scalewise Marketplace Advantage. Scalewise isn’t just a tool; it’s an ecosystem. The platform enables developers to create customized AI agents and publish them on a public marketplace. Other users or businesses can then find and use these pre-built agents.

This creates an incredible opportunity for entrepreneurial developers. Imagine you’re an expert in a specific domain, like cloud security compliance or a popular software like Salesforce. You could create a Scalewise agent, feed it all the official documentation and best practices, and then publish it to the marketplace. Businesses that need an expert AI assistant for that topic can then integrate your agent into their workflow, and you, the developer, can monetize your creation. This transforms the platform from a simple chatbot builder into a potential revenue stream. It fosters a community of builders who create and share high-value, specialized AI, something that a closed, project-based platform like Voiceflow cannot offer.

The Bottom Line: Pricing Models and Accessibility

For many developers, from solo indie hackers to startups and even enterprise teams, cost is a significant factor in their decision-making process. The pricing model can determine whether a project is feasible or not, especially during the experimentation and prototyping phases. Here, the contrast between Scalewise and Voiceflow could not be more stark.

Voiceflow’s Tiered Pricing

Voiceflow operates on a classic SaaS (Software as a Service) tiered pricing model.

- Free Tier: This is a sandbox for you to learn the platform. It is typically limited, often capping the number of users you can interact with and the number of projects you can have, and restricting access to advanced features. It’s not viable for a production application.

- Pro Tier: This tier is designed for individuals and small teams. It unlocks more features and increases usage limits, but it comes at a monthly per-seat cost. As your team grows, so does your bill.

- Enterprise Tier: This tier is designed for large organizations and offers custom pricing, typically ranging from $1,000 to $10,000 per year. It includes features like advanced collaboration, security reviews, and dedicated support.

The core takeaway is that with Voiceflow, scale comes at a price. As your AI agent becomes more successful and interacts with an increasing number of users, your operational costs will also rise. This can be a significant barrier for new projects where the ROI is not yet proven.

Scalewise’s Revolutionary Free Model

Scalewise approaches pricing with a developer-centric mindset. While not unlimited, its goal is to remove the initial financial barriers that often stifle innovation by offering one of the most generous and functional free tiers available in the industry. The focus is on providing real value upfront, allowing you to build, test, and launch a production-ready application without an initial investment.

Let’s break down what this means in practice. The free tier is designed to be a powerful launchpad, not just a restrictive sandbox. It includes:

- Multiple Agents: You can create and manage several distinct AI agents for different projects or prototypes.

- A Substantial Knowledge Base: The free tier offers sufficient data storage to support a comprehensive agent with extensive documentation.

- A High Quota of User Interactions: You get a generous monthly allowance of API calls and user interactions, sufficient for development, user acceptance testing, and even for serving small-to-medium scale production applications.

- Access to All Core Features: Crucially, Scalewise doesn’t lock its most powerful tools behind an immediate paywall. You get access to the full suite of features, ensuring you can build the best possible agent from day one.

This approach completely redefines the entry point for building with powerful AI. A solo developer can create a fully featured agent and deploy it to real users, validating an idea without incurring a subscription fee. A startup can integrate Scalewise into its MVP, confident that it won’t face costs until it achieves significant user traction.

The core philosophy is simple: you should only pay when your project succeeds and scales. Once your application’s usage exceeds the generous limits of the free tier, Scalewise offers transparent and predictable pricing plans that scale with you. This model aligns their success with yours and removes the financial risk from the most critical phase of a project—its beginning. It makes cutting-edge generative AI technology truly accessible, fostering a level of innovation and experimentation that is simply not possible with a traditionally restrictive pricing platform.

Use Case Suitability: Choosing the Right Tool for the Job

When should you choose one over the other? While our analysis suggests a clear advantage for Scalewise in most modern scenarios, let’s be objective about where each platform excels.

When to Consider Voiceflow

Voiceflow’s strength lies in the precise, granular control it gives you over a conversation. It is the better choice for use cases that resemble a state machine or a rigid, step-by-step process more than an open-ended conversation.

- Appointment Booking: A bot that requires collecting specific pieces of information in a fixed order (e.g., service type, date, time, name, email) is a good fit for Voiceflow’s flowchart model.

- Interactive Voice Response (IVR) Systems: For phone-based systems (“Press 1 for sales, Press 2 for support”), Voiceflow’s visual logic is a natural way to map out the call flow.

- Simple, Task-Oriented Bots: If your bot only needs to perform 3-5 specific tasks and you want to script every word of the interaction precisely, Voiceflow provides that level of control.

If your project is more about guiding a user through a predefined workflow than about understanding open-ended questions, Voiceflow is a solid, mature tool for the job.
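The appointment-booking case is a good example of why a fixed flow can be the right shape. A minimal slot-filling sketch, using the fields listed above (the prompts and function name are assumptions), shows how little intelligence the task needs:

```python
# Slots are collected in this fixed order, as in the booking example above.
SLOTS = ["service_type", "date", "time", "name", "email"]
PROMPTS = {
    "service_type": "Which service would you like to book?",
    "date": "What date works for you?",
    "time": "What time?",
    "name": "What is your name?",
    "email": "What email should we send the confirmation to?",
}


def next_prompt(filled):
    """Return (slot, question) for the first missing slot, or (None, closing line)."""
    for slot in SLOTS:
        if slot not in filled:
            return slot, PROMPTS[slot]
    return None, "All set. Confirming your booking."
```

Each turn asks for exactly one missing field, which is the deterministic, state-machine behavior a flowchart builder is designed for.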

When Scalewise is the Unbeatable Choice

For nearly every other modern use case, Scalewise’s generative, knowledge-based approach is vastly superior. Its flexibility, speed, and natural conversational ability make it the ideal choice for:

- Customer Support Automation: This is the killer use case. Feed it your help center articles, product documentation, and FAQs, and you have an instant expert support agent that can accurately and conversationally handle a massive percentage of incoming queries.

- Internal Knowledge Management: Build a bot for your internal teams. Feed it company policies, HR documents, technical documentation, or project specs. Employees can then just ask questions instead of hunting through convoluted wikis or shared drives.

- Developer and API Documentation Assistants: Upload your complete API documentation. Developers can then ask questions like, “What are the required parameters for the user authentication endpoint?” or “Show me an example of how to create a new widget in Python,” and get instant, accurate answers with code snippets.

- E-commerce Product Experts: An online store could upload the technical specifications and manuals for all its products. Customers could then ask complex, comparative questions, such as, “Does the X-T4 camera have better low-light performance than the Z6?”

- Rapid Prototyping and MVPs: With the ability to build a functional agent in minutes, Scalewise is the ultimate tool for quickly testing an idea or building a Minimum Viable Product (MVP) to demonstrate the value of conversational AI to stakeholders.

If your goal is to create an AI agent that can genuinely understand and intelligently discuss a body of knowledge, Scalewise is not just the better option; it operates in a completely different league.

The Final Verdict: Why Scalewise is the Future for Developers

Let’s circle back to our original goal: helping a developer choose the right platform. After a deep technical comparison, the conclusion is clear.

Voiceflow is a powerful tool from a previous era of conversational AI. It perfected the visual, rule-based approach to building chatbots. It offers deep control for those who need to design rigid, predictable workflows. However, that approach is fundamentally limited. It is slow to develop, complex to maintain, and creates agents that are brittle and unnatural.

Scalewise represents the new paradigm. It leverages the power of true generative AI to build agents that are not merely scripted but intelligent and autonomous. Its knowledge-base-first approach eliminates the most time-consuming parts of bot building: training intents and designing flowcharts. The result is a dramatically faster development process, allowing developers to go from idea to deployment in minutes.

When you add in a generous free tier that covers prototyping and early production use, the choice becomes even clearer. Scalewise removes the upfront financial risk, making powerful AI accessible to every developer. To top it all off, the Scalewise Marketplace offers a unique opportunity not just to build, but to monetize your expertise and contribute to a growing ecosystem.

For the modern developer who prioritizes rapid deployment, wants to harness the true power of large language models, and prefers to build without financial constraints, Scalewise isn’t just a Voiceflow alternative. It is the definitive, superior choice for building the next generation of conversational AI.

Stop drawing flowcharts. Start building intelligence.

Frequently Asked Questions (FAQs)

1. Is Scalewise really free forever? What’s the catch?

The core platform for building and deploying agents is free within the limits of the free tier, which is generous but not unlimited. Once your usage exceeds those limits, paid plans apply. Scalewise also plans to introduce revenue-generating options for large enterprises, including dedicated private cloud hosting, advanced security compliance packages (such as HIPAA), and professional services. The marketplace will also likely involve a revenue-sharing model. However, for individual developers, startups, or businesses using the core platform within the free tier, it remains free of charge.

2. How does Scalewise handle data privacy and security with my documents?

This is a critical concern. Scalewise treats user data with the highest level of security. Your uploaded documents are encrypted both in transit and at rest. They are stored securely and used only for the purpose of providing answers tailored to your specific agent. Your data is not used to train the global models or shared with any other users.

3. What kind of documents can I upload to Scalewise?

Scalewise is designed to handle a wide variety of formats. You can upload common file types, such as PDF, DOCX, TXT, and HTML. You can also connect it directly to web pages by providing a URL, and it will scrape and ingest the content. The platform is continuously expanding its list of supported data sources.

4. Can I use my own LLM with Scalewise or Voiceflow?

Voiceflow, in its enterprise tiers, offers flexibility in connecting with various NLU and LLM providers. Scalewise is built on its own highly optimized generative AI stack. While direct model swapping isn’t a feature for the general user, the API-first design allows you to always integrate Scalewise’s output into a larger workflow that may involve other AI models.

5. How do developers make money on the Scalewise Marketplace?

The marketplace enables developers to publish pre-trained, expert agents (e.g., an expert in the “AWS Well-Architected Framework”). As the creator, you can set a price for other businesses to use your agent through an API key. This could be a flat monthly fee or a usage-based model. Scalewise would facilitate the billing and take a small platform fee, creating a passive income stream for developers who build valuable, specialized knowledge agents.

6. Is Voiceflow better for voice applications like Alexa skills?

Voiceflow began with a strong focus on voice and offers excellent native tools for designing experiences for platforms like Amazon Alexa and Google Assistant. This is one area where its explicit, turn-by-turn design can be an advantage. While you could use Scalewise’s API to power the backend logic for a voice skill, Voiceflow’s dedicated voice interface design tools currently give it an edge for building voice-first applications.